Career Profile

I offer freelance consulting services in Robotics and Artificial Intelligence. I possess strong programming skills in C, C++, and Python, with expertise in Robot Operating System (ROS/ROS2) architecture design, ROS-based application development, system integration, and cloud robotics frameworks. Over my career, I have delivered numerous PoCs and developed robotic products and applications for retail and manufacturing industries. Additionally, I have trained and mentored new recruits, helping them gain proficiency in robotic technologies and applications.

Experience

Worked at Accenture as a Technical Architect Associate Manager, responsible for designing technical architectures for robotic systems used in pick-and-pack, material handling, and inspection, and for integrating them with cloud platforms and end-user applications. In addition to leading and mentoring my team, I collaborated closely with solution architects to develop RFP responses for robotics-related projects.

Served as a Team Lead (CTO-Research Engineer) for Robotic Systems at Tata Consultancy Services (TCS). My role involved creating on-board software architecture and developing robotic applications for various real-world use-cases.

Worked as a developer on inventory management and order management systems using IBM Sterling.

Projects

Developed a customer engagement application integrating ChatGPT with the Spot robot from Boston Dynamics. In lab demonstrations, the robot dog welcomes visitors, introduces its capabilities, and showcases various demos. It also performs autonomous inspection of pressure gauges in a mock factory premises.

The demo presented a Smart Manufacturing System concept that can learn new skills and perform new tasks. The use-case involved one component of a smart manufacturing line: a pick-and-pack robot working from orders generated in a UI. If the source (product) and destination (package) are new and unknown to the robotic system, it acquires the required object-detection skill by generating a large amount of synthetic data using Blender and training an AI model using Google’s Vertex AI. Once the skill is ready, the model and execution pipeline are tested virtually using Gazebo. After end-to-end testing in simulation, the skill can be used by the real robot, which fulfils the order based on the combination of products to be packed. The system can also integrate with Google MCE and MDE to send data from the system’s various stages of execution, store it in BigQuery, and use a Google Looker dashboard to analyze KPIs and improve overall equipment effectiveness.

This project involved the integration of two robotic arms, a rover, and an IR sensor with a PLC-controlled conveyor system within a discrete manufacturing line at MARS. Using quality checks performed at multiple QC stations, the first robotic arm sorted packets either into a scrap bin or directed them further along the production line. Another robotic arm picked the packets from the production line and placed them on the rover, which then transported the sorted packets to their final drop location.

Developed a drone-enabled visual inspection system for automated damage detection. A drone with an edge device running a deep learning model was integrated via APIs and triggered to autonomously navigate around vehicles, streaming video for real-time scratch/dent detection. Results were uploaded to Azure and displayed to end-users through a web application.

This interactive demo was created to warmly welcome guests visiting the lab. It utilized a face recognition module to identify visitors, followed by a text-to-speech system that greeted each guest by name. A Sawyer robot, equipped with a custom-designed, 3D-printed gripper, then held a marker and wrote a personalized welcome message on a canvas.

This fork-lift based Autonomous Mobile Robot (AMR) was developed in-house at TCS and deployed in a customer’s warehouse. The pallet-picking AMR navigates autonomously by creating a map of its environment and localizing itself within it, and moves pallets to designated drop locations based on orders received from the enterprise system. It also respects user-defined zones, such as speed limit, no-go, and blink zones, to ensure a safe and efficient workflow.
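A minimal sketch of how user-defined zones of this kind might be evaluated. This is not the deployed implementation: the `Zone` class, the `allowed_speed` function, the rectangular zone shape, and the default speed are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """Axis-aligned rectangular zone (hypothetical representation)."""
    kind: str               # "no_go" or "speed_limit" (assumed labels)
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    max_speed: float = 0.0  # used only by speed_limit zones, in m/s

    def contains(self, x, y):
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def allowed_speed(x, y, zones, default_speed=1.5):
    """Return the speed cap at (x, y); 0.0 means the robot must not enter."""
    speed = default_speed
    for zone in zones:
        if not zone.contains(x, y):
            continue
        if zone.kind == "no_go":
            return 0.0
        if zone.kind == "speed_limit":
            # The most restrictive overlapping limit wins.
            speed = min(speed, zone.max_speed)
    return speed


zones = [
    Zone("speed_limit", 0.0, 0.0, 5.0, 5.0, max_speed=0.5),
    Zone("no_go", 4.0, 4.0, 6.0, 6.0),
]
```

A planner could call `allowed_speed` for each candidate waypoint, rejecting waypoints that return 0.0 and capping velocity elsewhere.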

This differential drive Autonomous Mobile Robot (AMR) developed at TCS is designed to create a map of its environment, navigate autonomously, and localize itself, while operating based on user-defined zones. Additionally, it features a web-based user interface that allows end users to control and monitor the robot’s activities.

This product was selected as ‘Torchbearer of Innovation in TCS’ at TCS Innovista 2020.

Integrated the NAO robot with the TCS chatbot KNADIA and Google Cloud ASR so that it could respond to queries regarding TCS policies. This was presented at various innovation forums and was also showcased at IEEE RO-MAN 2019.

Hybrid picker-to-part web-based order fulfilment using UR5, UR10, and Turtlebot. Orders were placed for two picking stations, where robotic manipulators picked objects while the Turtlebot carrying the box moved between stations. Manipulators retrieved items from bins as per the order and placed them in the box. Designed the system architecture and developed a ROS node for Turtlebot navigation and coordination with the manipulators.

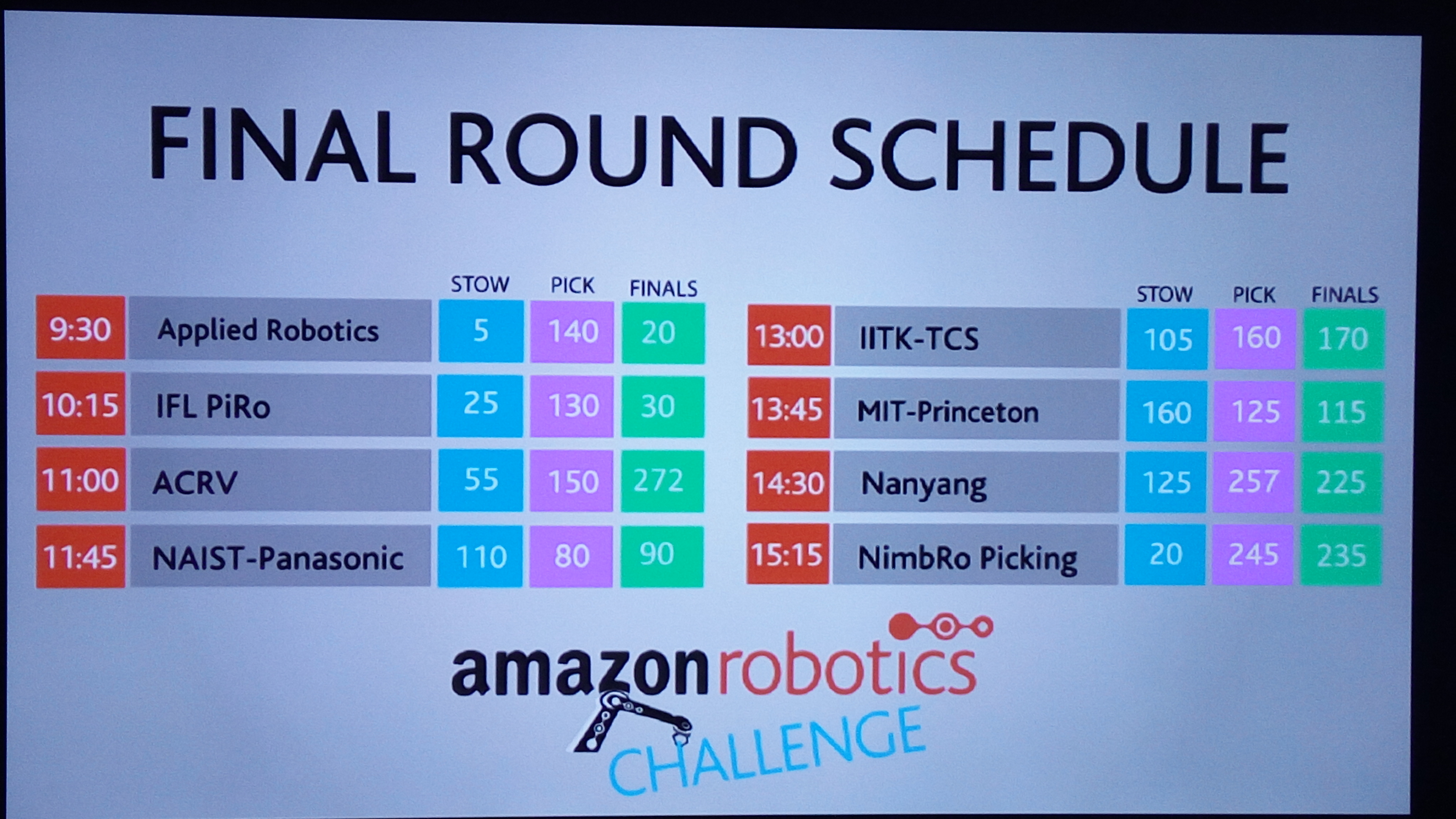

I was part of team IITK-TCS and developed the SMACH-ROS architecture for the system. Given a list of objects, the task was to autonomously pick each one from a bin and place it in its respective packing box. As shown in the video, the system combined a SMACH-ROS control architecture, object detection, 3D matching for pose estimation, and robot motion planning with dynamic OctoMap updates for collision avoidance. The video shows the pick task from the competition.
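The state-machine style of control described here can be illustrated with a small sketch in plain Python. It deliberately does not use the SMACH API; the state names, outcomes, and the `run_state_machine` helper are hypothetical, and the perception and motion steps are replaced by stubs.

```python
def run_state_machine(states, transitions, start, userdata):
    """Run states until a name with no registered state (a terminal label) is reached."""
    current = start
    while current in states:
        outcome = states[current](userdata)
        current = transitions[(current, outcome)]
    return current


def detect(ud):
    # Stub for object detection and pose estimation: take the next requested object.
    if not ud["pending"]:
        return "done"
    ud["target"] = ud["pending"].pop(0)
    return "found"


def pick(ud):
    # Stub for motion planning and grasping.
    return "picked"


def place(ud):
    # Stub for placing the object in its packing box.
    ud["packed"].append(ud["target"])
    return "placed"


states = {"DETECT": detect, "PICK": pick, "PLACE": place}
transitions = {
    ("DETECT", "found"): "PICK",
    ("DETECT", "done"): "FINISHED",
    ("PICK", "picked"): "PLACE",
    ("PLACE", "placed"): "DETECT",
}

userdata = {"pending": ["cup", "book"], "packed": []}
result = run_state_machine(states, transitions, "DETECT", userdata)
```

Keeping transitions in a table separate from the state functions makes it easy to add failure outcomes, such as a retry after a missed grasp, without touching the state code.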

The purpose of the PoC was to demonstrate robot fleet control using Rapyuta, a ROS-based cloud robotics platform. The top-level global planner runs on a cloud server and provides path information to the robots, which use their onboard navigation stack to follow the path. Each robot’s current location is updated on a display available on the cloud server. Obstacles can be created dynamically, which triggers the generation of new paths that are passed to the robots in real time.
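The replanning behaviour can be sketched on a small occupancy grid. This is an illustration only, assuming a breadth-first search on a 4-connected grid; it is not the planner used in the PoC and does not involve any Rapyuta or ROS API. The `plan_path` function and the grid layout are hypothetical.

```python
from collections import deque


def plan_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid; cells equal to 1 are obstacles."""
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to rebuild the path.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and nxt not in parents:
                parents[nxt] = cell
                queue.append(nxt)
    return None  # no route exists


grid = [[0] * 4 for _ in range(3)]
first_path = plan_path(grid, (0, 0), (0, 3))

# An obstacle appears on the current route, so the planner is run again.
grid[0][2] = 1
new_path = plan_path(grid, (0, 0), (0, 3))
```

In a fleet setting, the server would rerun the planner whenever the obstacle map changes and push the new path to the affected robots.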

This was the first task assigned to me when I joined the TCS Robotics Lab. Developed a real-time plot using a gnuplot C++ library to visualise the map from odometry data. The algorithm, with visualisation, is available on the ‘prasun’ branch of the repo.
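A minimal sketch of how odometry samples might be turned into a trajectory of points to plot. It assumes odometry arrives as (linear velocity, angular velocity) pairs at a fixed time step, which the original work may not have done; the function names are hypothetical and the plotting step itself is omitted.

```python
import math


def integrate_odometry(pose, v, omega, dt):
    """Advance an (x, y, theta) pose by one step of dead reckoning."""
    x, y, theta = pose
    x += v * math.cos(theta) * dt
    y += v * math.sin(theta) * dt
    # Wrap the heading into [-pi, pi).
    theta = (theta + omega * dt + math.pi) % (2 * math.pi) - math.pi
    return (x, y, theta)


def trajectory(samples, dt, start=(0.0, 0.0, 0.0)):
    """Return the list of poses visited, including the start pose."""
    poses = [start]
    for v, omega in samples:
        poses.append(integrate_odometry(poses[-1], v, omega, dt))
    return poses


# Two straight-line samples at 1 m/s with a 0.5 s step.
poses = trajectory([(1.0, 0.0), (1.0, 0.0)], dt=0.5)
```

The x and y columns of `poses` are what a plotting tool would consume to draw the path.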

Publications

Awards and Certifications